Reading List: Intersectional Data and Technology

Data shapes the world around us, from the policies that govern our lives to the algorithms curating our digital experiences. But data is never neutral. Every dataset carries the biases of those who collect, analyse, and interpret it.

Without an intersectional lens – a framework that considers how overlapping social categories such as race, gender, class, and disability create unique experiences of privilege and oppression – data-driven decisions risk reinforcing systemic inequities rather than dismantling them.

While intersectionality has become a key lens in the social sciences, its application to data is both relatively nascent and urgent. If we do not take an intersectional approach to data, we risk perpetuating harm through biased algorithms, exclusionary research methods, and one-size-fits-all policies.

Intersectionality challenges the traditional, one-dimensional ways we categorise people. However, applying intersectionality to data is complex. It requires disaggregated data, innovative methodologies, and a willingness to challenge dominant narratives.
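To illustrate why disaggregation matters, here is a minimal Python sketch using invented toy data. The groups "A" and "B", the approval outcome, and all counts are hypothetical and are not drawn from any study or from the readings below. Looking at race alone or gender alone shows a 30-point gap in approval rates. Breaking the same records down by race and gender together shows that the gap is concentrated in one group: women in group B, who are approved 20% of the time while every other group is approved 80% of the time.

```python
from collections import defaultdict


def make_records():
    """Build hypothetical (race, gender, approved) records from invented counts."""
    counts = {
        # (race, gender): (group size, number approved) -- illustrative only
        ("A", "man"): (10, 8),
        ("A", "woman"): (10, 8),
        ("B", "man"): (10, 8),
        ("B", "woman"): (10, 2),
    }
    records = []
    for (race, gender), (n, approved) in counts.items():
        records += [(race, gender, True)] * approved
        records += [(race, gender, False)] * (n - approved)
    return records


def approval_rates(records, key):
    """Approval rate per group, where `key` maps a record to its group label."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for rec in records:
        group = key(rec)
        totals[group] += 1
        approved[group] += int(rec[2])  # True counts as one approval
    return {g: approved[g] / totals[g] for g in totals}


records = make_records()
# Single-axis views: each one shows a moderate gap.
by_race = approval_rates(records, key=lambda r: r[0])
by_gender = approval_rates(records, key=lambda r: r[1])
# Intersectional view: race and gender considered together.
by_both = approval_rates(records, key=lambda r: (r[0], r[1]))

print(by_race)    # {'A': 0.8, 'B': 0.5}
print(by_gender)  # {'man': 0.8, 'woman': 0.5}
print(by_both)    # {('A', 'man'): 0.8, ('A', 'woman'): 0.8, ('B', 'man'): 0.8, ('B', 'woman'): 0.2}
```

One practical caveat: each additional category splits the data into smaller cells, so intersectional breakdowns often need larger samples or careful reporting of small counts.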

The way we collect and interpret data can either uphold or dismantle injustice. Governments, tech companies, and researchers are starting to rethink their methodologies, but there’s still a long way to go. Inclusive data practices are essential to creating policies, programs, and technologies that serve everyone, not just the dominant group.

Intersectionality isn’t just a buzzword – it’s a crucial lens for ensuring data-driven solutions serve everyone, rather than reinforcing power imbalances. The future of data must be inclusive, intersectional, and responsive to the complexities of individual experiences.

Below is a curated reading list featuring books, articles, and resources that explore issues related to intersectionality and data feminism. This selection includes examples of data discrimination, harmful algorithms, and opportunities to create intersectional, safe digital spaces.

Reading List

The Afro Feminist Data Futures project explores how feminist movements in Africa can be empowered through the production, sharing, and use of gender data, and translates its findings into actionable recommendations for private technology companies.

FRIDA is a feminist fund run by young feminists to support emerging feminist organisations, collectives, and movements. Recognising the patriarchal nature of mainstream tech platforms, FRIDA is building its own safe, autonomous, feminist digital spaces.

The Consentful Tech Project raises awareness and shares skills and strategies to help people build and use technology consentfully. Consentful technologies are built with consent at their core, and support the self-determination of people who use and are affected by these technologies.

The Feminist Principles of the Internet are a set of statements that together provide a framework for women’s movements to articulate and explore issues related to technology. They offer a gender and sexual rights lens on critical internet-related rights.

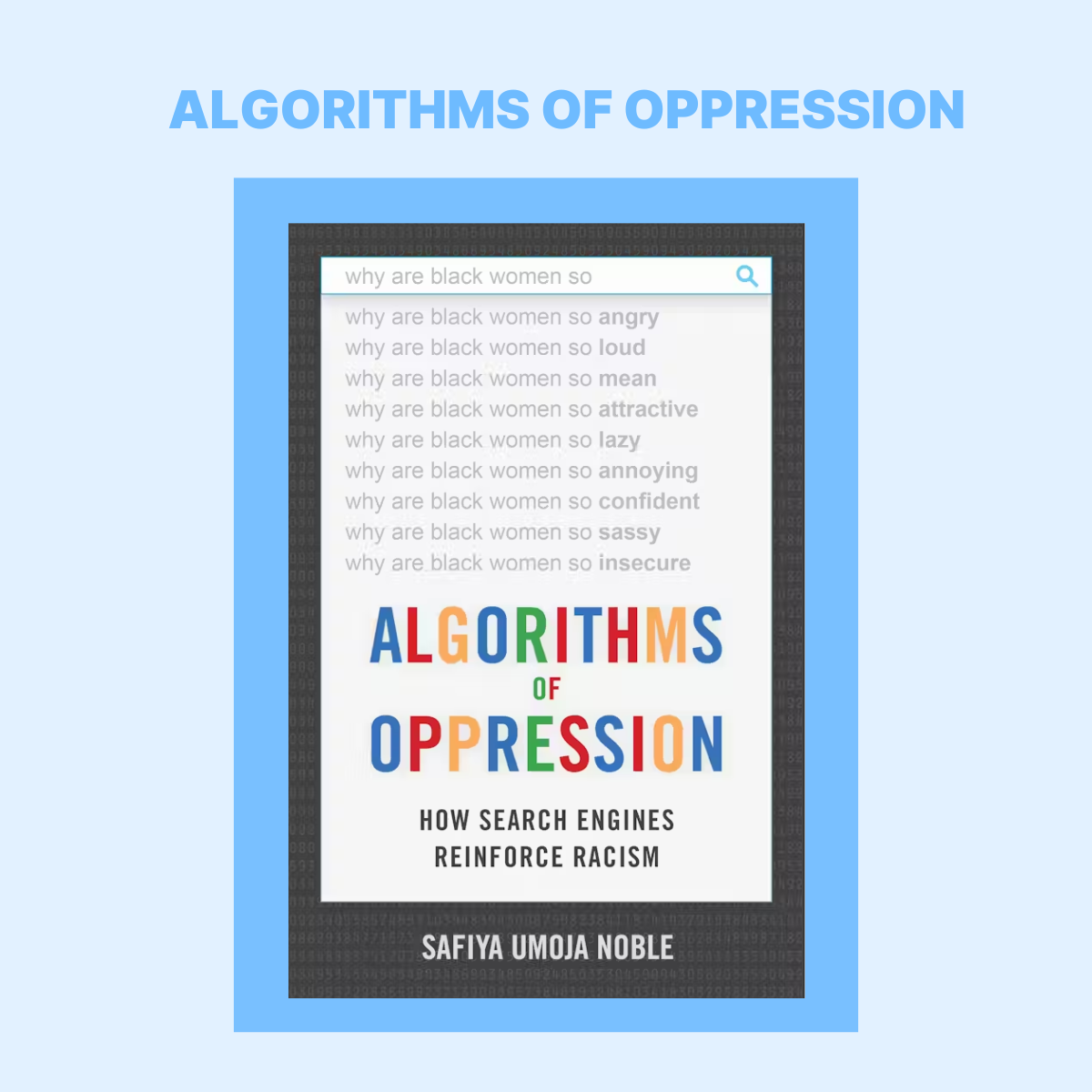

In Algorithms of Oppression, Safiya Umoja Noble critiques search engines such as Google, arguing that the combination of private commercial interests and the monopoly status of a few search engines leads to biased algorithms that privilege whiteness and marginalise people of colour, especially women of colour.

In Unmasking AI, Joy Buolamwini applies an intersectional lens to both the tech industry and the research sector to show how racism, sexism, colourism, and ableism can overlap and render broad swaths of humanity "excoded" and therefore vulnerable in a world rapidly adopting AI tools.

The Cyberfeminism Index is a collection of techno-critical works from 1990–2020, gathered and facilitated by Mindy Seu. In the Index, hackers, scholars, artists, and activists of all regions, races, and sexual orientations consider how humans might reconstruct themselves by way of technology.

In Race After Technology, Ruha Benjamin explores how emerging technologies can reinforce White supremacy and deepen social inequity. Benjamin argues that automation has the potential to hide, speed up, and deepen discrimination while appearing neutral and even benevolent when compared to the racism of a previous era.

The narratives around big data and data science are overwhelmingly white, male, and techno-heroic. In Data Feminism, Catherine D’Ignazio and Lauren Klein present a new way of thinking about data science and data ethics informed by intersectional feminism. Data feminism is about power and how power differentials can be challenged and changed.

Did you know that many facial recognition systems are far less accurate for people with darker skin tones? In More Than a Glitch, Meredith Broussard explores why neutrality in tech is a myth and why algorithms need to be held accountable.

In Consent to our Data Bodies: Lessons from Feminist Theories to Enforce Data Protection, Paz Peña and Joana Varon explore how feminist views and theories on sexual consent can inform the data protection debate.

In Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, Cathy O'Neil reveals that many of the mathematical models in use today are opaque, unregulated, and incontestable, even when they're wrong. Most troubling, they reinforce discrimination, creating a toxic cocktail for democracy.

Automated systems control which neighbourhoods get policed, which families get needed resources, and who is investigated for fraud. In Automating Inequality, Virginia Eubanks investigates the impacts of data mining, policy algorithms, and predictive risk models on poor and working-class people in the United States.

What does fairness look like when computers shape decision-making? Can AI be created by and for low-income communities and communities of colour? A People's Guide To Tech breaks down and contextualises artificial intelligence technologies within structural racism and discusses alternative futures for AI.

In attempting to envision AI systems that are emancipatory and liberatory for all peoples, Principles of Afro-feminist AI Data follows an Afro-feminist approach in both its inquiry and the propositions it puts forward. Afro-feminism seeks to create its own theories, rooted in the diverse realities of African women, as a way of challenging all the forms of domination they encounter, particularly those related to patriarchy, race, sexuality, and global imperialism.
